The digital services economy (including “AI”) is proving to be unexpectedly power-hungry. Grid operators have largely been caught off guard by how quickly demand is growing, and analysts of the American power grid expect data centres to dramatically alter the supply-demand dynamics of grid power over the next five years. If you will allow me an illustrative thought experiment, here, verbatim, are projections (with reference links) of that demand picture, assembled from a single query to the LLM search engine “perplexity.ai”:

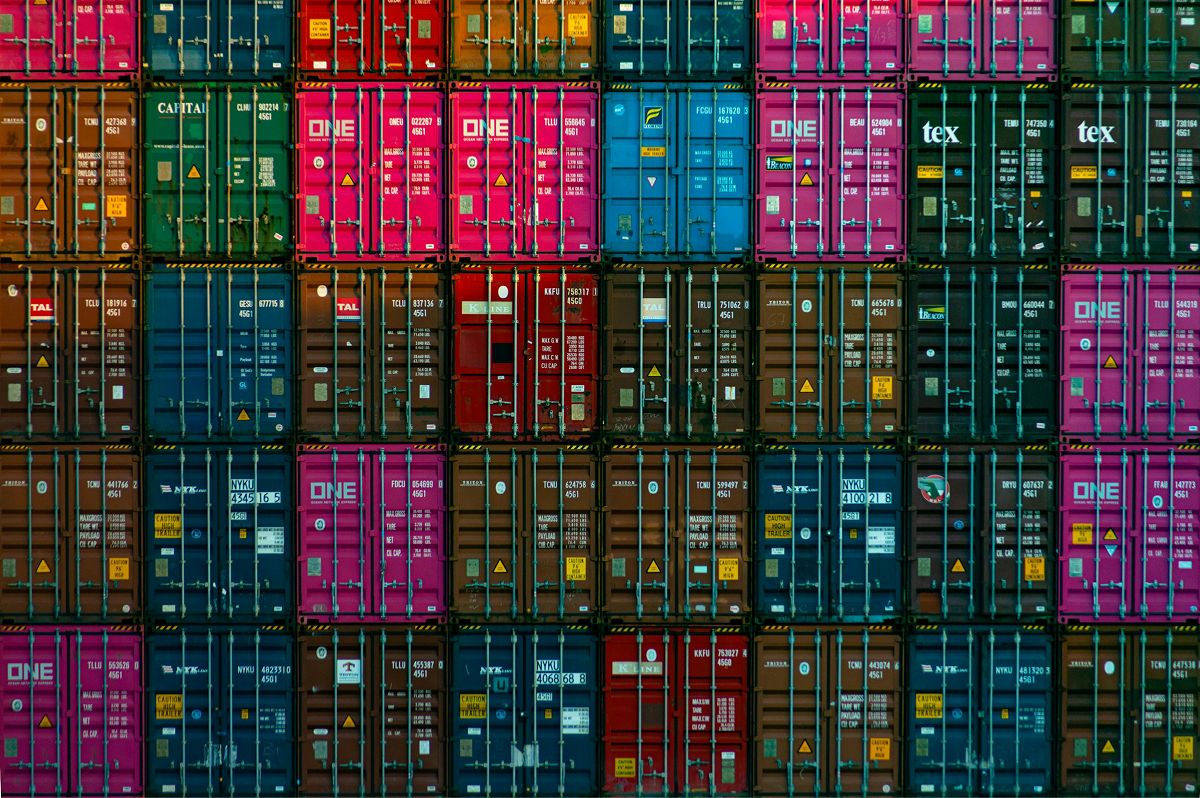

- ‘By 2030, data centers could account for 7.5-8% of total US electricity consumption, up from 2.5-3% currently[1][2].

- Between 2024 and 2030, electricity demand for data centers in the United States is projected to increase by about 400 terawatt-hours, growing at a compound annual growth rate (CAGR) of approximately 23%[3].

- Data center load may constitute 30-40% of all net new electricity demand added in the US until 2030[3].

- Some estimates suggest that data center power demand will account for up to 60% of total US load growth from 2023 through 2030[4].

- By 2028, third-party US data centers are estimated to represent 6.6% of US electricity consumption, a significant increase from 1.5% in 2018[5].

- This surge in data center electricity demand is primarily driven by the growth of artificial intelligence (AI) technologies, particularly generative AI, which requires substantial computing power. The increase in demand marks a shift from the previous era of relatively flat power demand growth in the US electricity sector.’
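As a rough consistency check on the CAGR bullet, the two quoted numbers (a ~400 TWh increase and ~23% annual growth) can be combined to back out the starting level they imply. This is a minimal sketch, assuming (our simplification, not the sources') that growth compounds at a constant 23% a year over six years, 2024 to 2030; the function name `implied_baseline` is illustrative only.

```python
# Back out the 2024 baseline implied by the quoted projection.
# Assumption (ours): a constant 23% CAGR compounding over six years, 2024 -> 2030.

def implied_baseline(increase_twh: float, cagr: float, years: int) -> float:
    """Return starting demand D0 such that D0 * ((1 + cagr)**years - 1) == increase_twh."""
    growth_factor = (1 + cagr) ** years  # cumulative growth multiple over the period
    return increase_twh / (growth_factor - 1)

baseline_2024 = implied_baseline(400, 0.23, 6)    # TWh in the starting year
level_2030 = baseline_2024 * (1 + 0.23) ** 6      # TWh in the final year

print(f"implied 2024 data-centre demand: {baseline_2024:.0f} TWh")
print(f"implied 2030 data-centre demand: {level_2030:.0f} TWh")
```

Under those assumptions the figures imply US data-centre demand of roughly 160 TWh in 2024 rising to roughly 560 TWh by 2030.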

A second prompt to the same tool produces an estimate of the energy cost of a search like this one (i.e., one that runs on large language models) compared with a conventional search engine query:

“The energy cost of performing a single search on Perplexity AI is estimated to range between 0.0005 and 0.005 kWh, depending on the complexity of the query and the model’s computational requirements.[6] This is approximately 10–15 times more energy than a traditional Google search, which consumes around 0.0003 kWh per query[7][8][6].

For context, this energy usage is lower than some other AI-powered models like ChatGPT, which can consume up to 0.01 kWh per query[7]. The higher energy consumption in AI searches compared to traditional search engines is due to the computational intensity of running deep neural networks across billions of parameters to generate responses dynamically.”[9][8]
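Taking the quoted per-query figures at face value, the ratio between the two kinds of search can be computed directly. This is a minimal sketch that uses only the numbers in the quote above; the constant names are our own.

```python
# Per-query energy figures as quoted above, in kWh per query.
PERPLEXITY_LOW_KWH = 0.0005   # low end of the quoted Perplexity range
PERPLEXITY_HIGH_KWH = 0.005   # high end of the quoted Perplexity range
GOOGLE_KWH = 0.0003           # quoted conventional Google search

# How many conventional searches' worth of energy one AI search uses.
low_ratio = PERPLEXITY_LOW_KWH / GOOGLE_KWH
high_ratio = PERPLEXITY_HIGH_KWH / GOOGLE_KWH

print(f"AI search: roughly {low_ratio:.1f}x to {high_ratio:.1f}x a conventional search")
```

On these inputs the range works out to roughly 1.7x to 17x, so the quoted “10–15 times” corresponds to the upper part of the Perplexity estimate.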

Given the geostrategic importance assigned to AI, the weight of digital services in both the US export and domestic economies, investors’ appetite to participate in the AI boom, and the broadening practical applications of these tools (as illustrated above), the trend towards AI-driven electricity demand looks all but locked in.

For large electricity users, a question worth asking is whether this data-centre demand will be offset by other factors, or whether it is part of a broader secular upswing in demand. That’s the subject we’ll take up in part 3 of this series.

To be continued…

[1] The Era of Flat Power Demand is Over – Grid Strategies

[2] AI is poised to drive 160% increase in data center power demand – Goldman Sachs

[3] How data centers and the energy sector can sate AI’s hunger for power – McKinsey and Company

[4] Breaking Barriers to Data Center Growth – BCG

[5] Data Centers Part II: Power Constraints – The Path Forward – TD Securities

[7] How Much Energy Do Google Search and ChatGPT Use? – RW Digital

[8] Post by Matt Carson – LinkedIn

[9] How Your AI Queries are Putting Carbon Back in the Air – Outlook Planet